For Orbital Resonance, we researched and experimented with an interactive light design driven by our own infrared (IR) camera tracking system.

During our process, we used different cameras, filters, and theater lights to build an optimal motion-tracking system. We tested software platforms such as MAX/MSP/Jitter and VVVV to manipulate the data from our movements in the interactive space. The following sections describe how we set up the IR lighting so that the IR tracking could activate our environment.

Light Followers

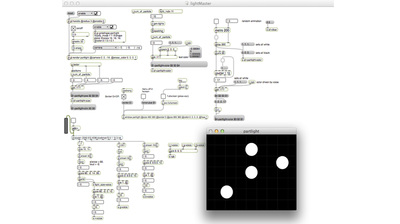

As the audience and performers enter the “stage” (active area), the space is pitch black to create an intimate setting. The only stimuli present are the sounds of the performers’ breathing, spatialized through speakers placed above, around, and below the various surfaces. After a period of time, the vocal and breathing improvisation intensifies, and a projection appears for each individual and follows them around the space. It starts simple and clean, with just a projected blob (blurred, with no sharp edges), as if it were an actual theatre spotlight. As the performers continue improvising (talking, mumbling, and breathing louder or noisier), the lights start trembling to their voices. Eventually, the lights start shifting in size, mapped to the performers’ actions, as if they were beginning to breathe themselves. The lights begin to have their own agency. The bodies in the space create the lights’ presence, but after a while the lights manifest their own character. They follow the bodies, drift away from the bodies, or freeze in time momentarily. At the end of this phase, the lights slowly shrink and disappear as we move to the next state.
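As a rough illustration of the "breathing" and "trembling" behaviour described above, the following Python sketch scales a blob's radius with a normalized voice level and adds a small oscillation on top. It is not the patch used in the piece: the function name `blob_radius`, the parameter names, and all default depths and rates are assumptions chosen for the example.

```python
import math

def blob_radius(base_radius, voice_level, t,
                breath_depth=0.3, tremble_depth=0.05, tremble_hz=8.0):
    """Return a blob radius that grows and trembles with the voice level.

    base_radius   -- radius of the blob when the performer is silent
    voice_level   -- normalized loudness in [0, 1] (values outside are clamped)
    t             -- current time in seconds
    breath_depth, tremble_depth, tremble_hz -- hypothetical tuning values
    """
    level = min(max(voice_level, 0.0), 1.0)
    # Slow "breathing": the blob swells in proportion to the voice level.
    breathing = 1.0 + breath_depth * level
    # Fast "trembling": a small sine wobble whose amplitude follows the voice.
    tremble = 1.0 + tremble_depth * level * math.sin(2 * math.pi * tremble_hz * t)
    return base_radius * breathing * tremble

if __name__ == "__main__":
    # Sample one second of a performer at 80% loudness, ten times per second.
    for step in range(10):
        t = step / 10
        print(f"t={t:.1f}s radius={blob_radius(1.0, 0.8, t):.3f}")
```

With a silent performer the radius stays at `base_radius`, so the light reads as a plain spotlight until the voice comes in.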

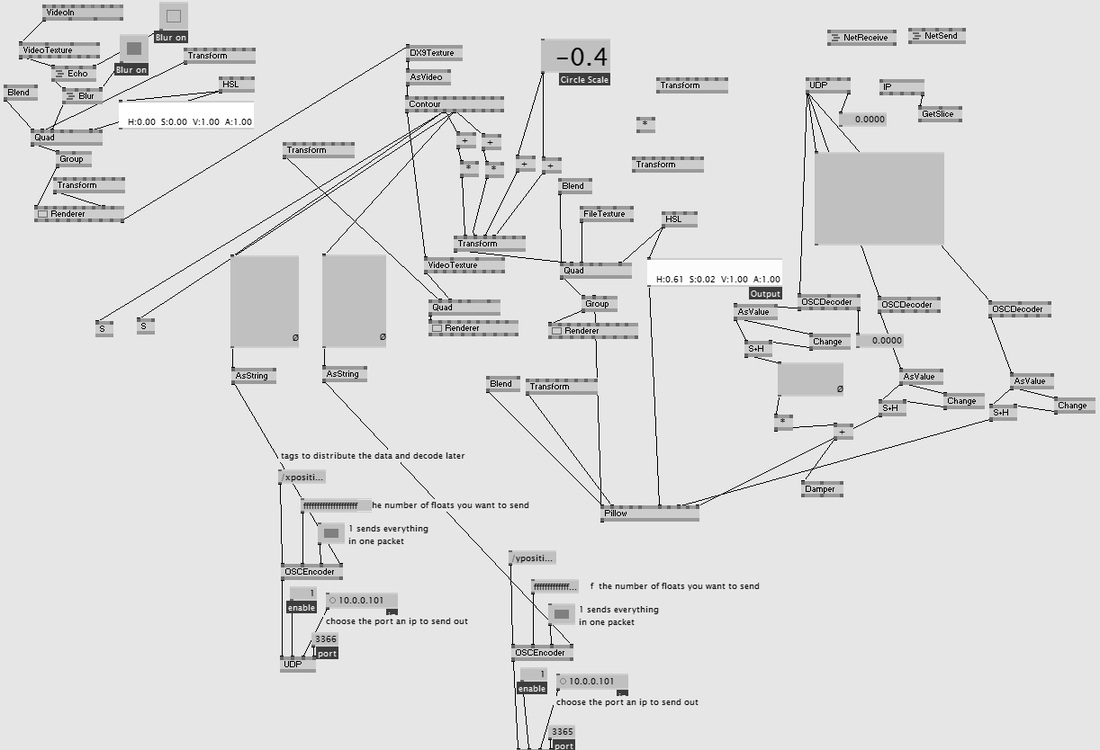

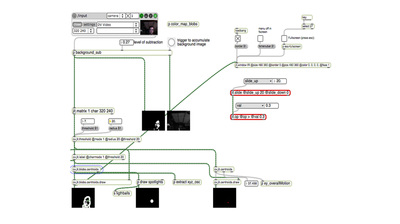

The image above shows a screenshot of the patch responsible for the body-position tracking (described in the movement section) and for generating the light blobs used for the theatre-light effect. The light effects and their parameter values were passed through the system via the OSC protocol.
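The patch itself ran in a visual programming environment, so the following is only a minimal Python sketch of what passing blob values over OSC can look like at the byte level. It follows the OSC 1.0 message layout (padded address, padded type-tag string, big-endian float32 arguments). The address `/blob/1`, the argument order (x, y, radius), and the host and port are hypothetical and do not come from the original setup.

```python
import socket
import struct

def osc_pad(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_pad(address) + osc_pad(type_tags) + payload

def send_osc(message: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one OSC message over UDP (host and port are placeholder values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))

if __name__ == "__main__":
    # Hypothetical blob at the centre-left of a normalized floor, small radius.
    msg = osc_message("/blob/1", 0.5, 0.25, 0.125)
    send_osc(msg)
```

Keeping one address per performer (for example `/blob/1`, `/blob/2`) is one possible way to let the receiving patch route each light independently.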

To create a full floor projection at Concordia's Hexagram BlackBox, we used a single short-throw projector. The projector, an Epson PowerLite 460W XGA Multimedia Projector, was mounted on the grid at 90 degrees, facing the floor. The projection covered a 30' x 45' area, matching the area used for tracking in the performance space. Another possible solution would have been to use moving lights, but given the limited number of moving lights at our disposal, it was not an option. Our solution worked very well because we were only projecting low-resolution blobs to simulate moving lights. If we had needed a higher-resolution image, the solution described above would not have worked.

IR Lights

For the infrared tracking, we placed four light-stands on each side of the active space, facing inwards. There were three lights on each stand, for a total of twelve lights on each side. We used the following recipe to create the best possible IR filters for the space:

Roscolux: two #19 ("Fire") + #83 (Medium Blue) + #90 (Dark Yellow Green)

We placed the filters in that order with the red layer facing towards the space.

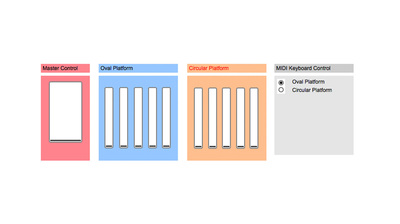

Platform Lights

We experimented with different shapes and materials to achieve the best sonic result and light effect. Each of the two platforms contained a surface transducer placed underneath the structure, alongside 8 different light bulbs and a DMX-controlled light box. We mapped different physiological states of the performer, as well as the different states of the space, onto the lights and vibrations, shifting the way the objects were perceived by the audience.
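The exact mapping from physiological states to the platform lights is not detailed here, so the following Python sketch shows just one possible approach: a normalized intensity (for instance a breathing level between 0 and 1) is spread across the 8 bulbs so that they fade in one after another, and each level is converted to the 0 to 255 range of a DMX channel. The function names, the sequential fade, and the one-channel-per-bulb layout are all assumptions made for illustration.

```python
def to_dmx(value: float) -> int:
    """Convert a normalized value in [0, 1] to a DMX channel level (0-255)."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255)

def bulb_levels(intensity: float, n_bulbs: int = 8) -> list[int]:
    """Spread one normalized intensity across n_bulbs DMX channels.

    Each bulb fades in over its own 1/n_bulbs slice of the intensity range,
    so a rising intensity lights the bulbs one after another.
    """
    levels = []
    for i in range(n_bulbs):
        # Position of the intensity inside this bulb's slice (clamped by to_dmx).
        local = intensity * n_bulbs - i
        levels.append(to_dmx(local))
    return levels

if __name__ == "__main__":
    for intensity in (0.0, 0.25, 0.5, 1.0):
        print(intensity, bulb_levels(intensity))
```

The resulting list would still need to be written into a DMX universe by whatever interface drives the light box, which is outside the scope of this sketch.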